1 ISP Overview

Internet popularity is driving demand for ever-faster service and exerting downward pressure on price. The connection between end user and ISP is often called the last-mile. This implies there is a magical entity out there called “The internet” and customers are passive consumers of internet goodness. I prefer the term first-mile. It better denotes internet value as the result of each person’s connection as both contributor and consumer. Today most citizens in industrialized countries have access to some form of high-speed service. Broadband is increasingly seen as a utility without which citizens are unable to fully participate in modern society.

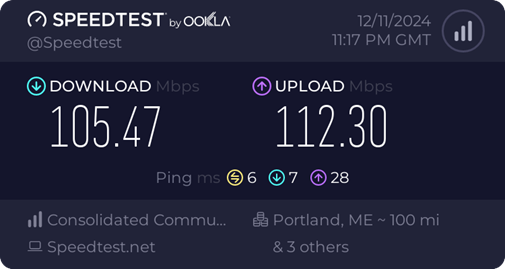

Broadband is a much abused and inexact term. The United States Federal Communications Commission has repeatedly revised its definition of minimum broadband speed. In 2015 it was set to 25 Mbps download and 3 Mbps upload. In 2024 the definition was increased to 100 Mbps download and 20 Mbps upload.

Most of us utilize an Internet Service Provider (ISP) to access the internet. The ISP owns, leases, or otherwise has access to a connection to each customer. The picture below provides a high level overview of how ISPs connect customers to the internet.

Figure 1 ISP Functional Block Diagram

Connecting to an ISP would not have much value if the only people you can communicate with are other ISP customers. To provide worldwide connectivity ISPs connect to other ISPs at peering points. This allows traffic to be delivered anywhere in the world.

ISPs exert a great deal of control over how customers use the internet. Much is made of internet robustness and redundancy. That is true of the internet in general but for most of us the ISP acts as the on-ramp gatekeeper, limiting how it can be used. In most locations broadband competition is nonexistent or extremely limited. ISP business policy has significant impact on how customers use the internet and how new internet services are deployed.

There are several essential functions that must be provided by the ISP, as they are the only entity capable of doing so. There are many services, often associated with ISPs, which can be provided by anyone. The distinction between essential and non-essential functions is important when discussing Network Neutrality. As broadband access becomes more pervasive ISPs and policy makers need to balance business considerations with public interest.

Essential Core Functions

· Customer Connection

· Customer Authentication

· Customer Address Allocation

· Packet Routing

· Peering

· Multicast (IGMP)

· Quality of Service (QoS)

· Service Level Agreement (SLA)

· Acceptable Use Policy (AUP)

· CALEA

· Customer Support

· Billing

Non-Essential Services

· Name Resolution (DNS)

· Usenet

· Web Hosting

· Cloud Based File Storage

· Virtual Private Network

· Voice over IP

· Fixed Mobile Convergence

· IP Radio

· IP Television

1.1 Essential Core Functions

ISPs deliver a suite of services. When evaluating an ISP it is important to keep in mind which features are core functions that only the ISP can provide and which are value-add services that can be provided by a third party.

1.1.1 Customer Connection - Physical

First and foremost the ISP needs to provide a method for customers to access the ISP network.

Some ISPs own the first-mile access network; Cable and fiber to the premises (FTTP) are examples of this type of ISP. The ISP owns and manages the outside plant customer connection. DSL ISPs typically rent physical access to the legacy copper phone line from the Incumbent Local Exchange Carrier (ILEC) and collocate their equipment in the phone company central office.

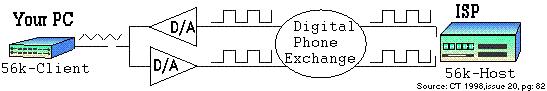

Dialup ISPs use the PSTN (Public Switched Telephone Network) to connect customers. The ISP creates regional points of presence (POP) near the customer so the customer is able to call a local ISP telephone number, avoiding per minute charges. The ISP in turn digitally terminates phone lines to support V.90/92 dialup speeds.

Wireless ISPs, both fixed and cellular, do not provide a physical connection at all. Rather they obtain a government license to use the public airwaves to connect customers. The customer connects to the ISP’s radio network and traffic is then transported much the same as with other ISPs.

Customer interface requirements differ greatly depending on type of service and whether or not the ISP provides the network access device. For example Cable and DSL ISPs typically provide the customer with a standards-based modem router with an Ethernet or WiFi interface. Fiber optic service requires an ONT (optical network terminal) that converts the optical signals into copper Ethernet and optionally to legacy landline telephone and Cable TV. In the US T-1 carrier is a tariffed telecommunication service. The FCC defined the customer interface as a two pair copper circuit, typically implemented via a smart-jack. Dialup ISPs require the customer to obtain a V.90/92 or ISDN modem. Fixed wireless ISPs typically supply and install the customer antenna and radio. Cellular providers often provide a subsidized smart mobile phone when a customer signs up for service. However this trend is in decline, with customers often able to purchase an unlocked phone on the open market.

1.1.2 Customer Connection – Logical

The ISP provides either a routed or bridged customer connection. Residential accounts are typically bridged; the customer connects to the ISP as if they were part of the ISP LAN. VLAN techniques prevent users from seeing each other’s traffic. Business class accounts are typically routed rather than bridged. The ISP’s edge router communicates with the customer’s edge router. Routed connections are more flexible, but also more complex, than bridged.

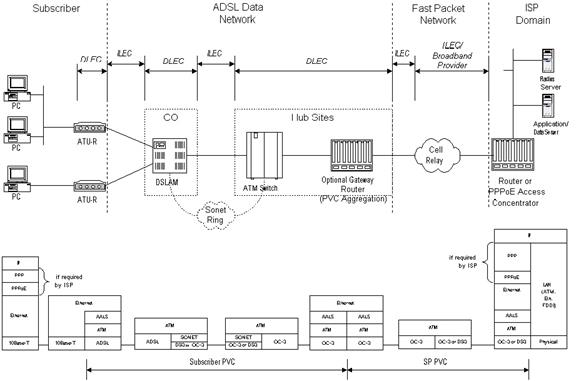

1.1.3 Authentication

The ISP needs a mechanism to ensure only authorized customers connect to its network. For some types of service the link between customer and ISP is hardwired so any traffic appearing on the link is assumed to originate from the customer. T1 and FTTP are typical hardwired connections. Shared media such as Cable and wireless need a way to identify the customer. DOCSIS modems include a digital certificate to prevent unauthorized access. ADSL ISPs typically use PPPoE (Point-to-Point Protocol over Ethernet) to authenticate customers. Telcos like PPPoE because it facilitates support for third-party ISPs. Dialup ISPs typically utilize PPP (Point-to-Point Protocol) to authenticate customers.

1.1.4 Address Allocation

Each internet host requires a unique address. ISPs typically provide residential customers with a single IPv4 address. Large customers may obtain their addresses directly from a Regional Internet Registry (RIR), which receives address blocks from IANA under ICANN (Internet Corporation for Assigned Names and Numbers), or from wholesale ISPs. IPv4 defines a 32-bit address space yielding about 4 billion possible addresses. That was a large number back when the internet was limited to a few educational and government institutions but has become a serious limitation today. As a result IPv4 addresses are in very short supply. The next generation internet protocol, IPv6, increases the address space to 128 bits, a truly humongous number. With IPv6 even residential customers are issued a large block of addresses. The transition to IPv6 has been glacially slow because it is not backward compatible with IPv4.

If the ISP does not have access to enough public IPv4 addresses it uses CGNAT (carrier grade network address translation). Essentially the ISP performs the same translation a residential router uses to share a single IP address among multiple devices, except here one public address is shared among multiple customers.
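The address sharing described above can be pictured as a translation table. What follows is only a rough sketch, not how any particular CGNAT product works. The class name, port range and example addresses are assumptions made for illustration.

```python
class CarrierNat:
    """Toy NAT: many private (address, port) pairs share one public address."""

    def __init__(self, public_ip, first_port=1024, last_port=65535):
        self.public_ip = public_ip
        # Public ports are handed out in order; a real device is far more careful.
        self._free_ports = iter(range(first_port, last_port + 1))
        self._outbound = {}   # (private_ip, private_port) -> public_port
        self._inbound = {}    # public_port -> (private_ip, private_port)

    def translate_out(self, private_ip, private_port):
        """Map an outgoing flow to the shared public address and a unique port."""
        key = (private_ip, private_port)
        if key not in self._outbound:
            public_port = next(self._free_ports)
            self._outbound[key] = public_port
            self._inbound[public_port] = key
        return self.public_ip, self._outbound[key]

    def translate_in(self, public_port):
        """Find the customer behind a public port; None if no mapping exists."""
        return self._inbound.get(public_port)


nat = CarrierNat("203.0.113.5")                  # documentation address, hypothetical
print(nat.translate_out("100.64.0.10", 50000))   # customer A
print(nat.translate_out("100.64.0.11", 50000))   # customer B, same private port
print(nat.translate_in(1025))                    # reply traffic finds customer B
```

Two customers using the same private port end up on different public ports, which is how one public address can serve many subscribers. It also shows why unsolicited inbound traffic has nowhere to go: there is no table entry for it.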

IP addresses serve multiple functions. They denote a specific internet host; each host needs an IP address. IP addresses also facilitate routing because they are allocated in blocks. If IP addresses were issued randomly each router would need to potentially look through billions of addresses to determine how to handle each packet. By aggregating addresses into large blocks routers only need look at a few high order address bits to determine how to forward packets.
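To make the aggregation idea concrete, here is a small sketch using Python's standard ipaddress module. The forwarding table, next hop names and prefixes are invented for the example. Real routers use specialized data structures rather than a linear scan.

```python
import ipaddress

# Hypothetical forwarding table: prefix -> next hop name
ROUTES = {
    "0.0.0.0/0": "transit",             # default route
    "198.51.100.0/24": "peer-a",
    "198.51.100.128/25": "customer-7",
}

def next_hop(destination, routes=ROUTES):
    """Return the next hop for the most specific prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    best = None
    for prefix, hop in routes.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None

def aggregate(prefixes):
    """Merge adjacent blocks into the fewest prefixes that cover them."""
    nets = [ipaddress.ip_network(p) for p in prefixes]
    return [str(n) for n in ipaddress.collapse_addresses(nets)]

print(next_hop("198.51.100.200"))   # matches the /25
print(next_hop("8.8.8.8"))          # falls through to the default route
print(aggregate(["192.0.2.0/26", "192.0.2.64/26",
                 "192.0.2.128/26", "192.0.2.192/26"]))
```

Four contiguous /26 blocks collapse into a single /24, so a distant router needs one table entry instead of four. Only the router closest to the customers needs the detail.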

Business accounts are typically configured statically. With a static address, customer settings are configured manually based on information provided off-line by the ISP. This eliminates the possibility of an address change interfering with remote access.

Most residential accounts obtain their IP address dynamically. This is convenient because it eliminates the need for non-technical customers to manually configure IP address, subnet mask, gateway address and DNS server address. A dynamically assigned address may change at any time, making it difficult to operate servers.

1.1.5 IPv6 Support

February 2011 witnessed a major milestone on the journey to mass deployment of IPv6: IANA made the final allocation of IPv4 addresses. This event had been long anticipated, and having finally occurred it ought to spur more rapid deployment of IPv6, the successor to IPv4. IPv6 represents a significant improvement over IPv4 but adoption has been painfully slow. The reason is IPv6 is not backward compatible with IPv4. This is because IPv4 has a 32-bit address space supporting approximately 4 billion hosts (4.3 × 10^9) while IPv6 uses 128 bits for a mind boggling 340 undecillion hosts (3.4 × 10^38). The massive address space allows large blocks of addresses to be allocated, thus easing routing and management.
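The address counts quoted above follow directly from the number of address bits. A quick check in Python (the helper name is just for illustration):

```python
def address_count(bits):
    """Number of distinct addresses in an address space of the given width."""
    return 2 ** bits

ipv4_total = address_count(32)
ipv6_total = address_count(128)

print(f"IPv4: {ipv4_total:,} addresses ({ipv4_total:.1e})")
print(f"IPv6: {ipv6_total:.1e} addresses")
# Every single IPv4 address could be given 2**96 IPv6 addresses of its own.
print(ipv6_total // ipv4_total == 2 ** 96)
```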

Since IPv6 is not backward compatible ISPs offer a number of ways to support the transition.

1) Dual-Stack is probably the easiest to understand. The ISP provides customer with both IPv4 and IPv6 addresses. Customer equipment uses the appropriate version to communicate with the remote host, preferentially using IPv6. The down side of this implementation is the need to provide the customer with a public routable IPv4 address and the customer network gear has to support both IPv4 and IPv6. The lack of IPv4 addresses is why it is imperative the internet adopt IPv6.

2) Dual-Stack Lite: The ISP provides only IPv6 addresses to customers; all traffic between customer and the ISP network is IPv6. When a customer accesses an IPv4 internet host the customer’s router encapsulates the IPv4 packet and transports it over the IPv6 connection to the ISP. The ISP uses CGNAT (carrier grade NAT), much like the way a typical home network shares a single IP address today. The customer’s router decapsulates returning IPv4 packets and distributes IPv4 and IPv6 packets within the LAN. Just to keep life interesting the term CGN has been deprecated and it is now called large scale NAT (LSN) to more accurately reflect what the technique does.

3) Tunneling (6in4) is a way for IPv6 packets to be transported over an IPv4-only network to another IPv6 network. This is probably not of interest to most readers of this paper but is very useful for companies with many locations that have adopted IPv6 internally.
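Both Dual-Stack Lite and 6in4 rely on encapsulation: one protocol's packet rides as the payload of the other. As a rough sketch of the 6in4 case, the code below wraps an IPv6 packet in a minimal IPv4 header using protocol number 41, the value assigned to IPv6-in-IPv4. The function names and addresses are hypothetical. Fragmentation, options and actually sending the packet are left out.

```python
import ipaddress
import struct

PROTO_IPV6_IN_IPV4 = 41  # IP protocol number for IPv6 carried inside IPv4

def ipv4_checksum(header):
    """One's-complement sum of the header's 16-bit words."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total > 0xFFFF:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def encapsulate_6in4(ipv6_packet, src_v4, dst_v4, ttl=64):
    """Prefix an IPv6 packet with a 20-byte IPv4 header (no options)."""
    src = ipaddress.IPv4Address(src_v4).packed
    dst = ipaddress.IPv4Address(dst_v4).packed
    total_length = 20 + len(ipv6_packet)
    # Fields: version/IHL, DSCP/ECN, total length, identification,
    # flags/fragment offset, TTL, protocol, checksum (0 for now), src, dst
    header = struct.pack("!BBHHHBBH4s4s", 0x45, 0, total_length, 0, 0,
                         ttl, PROTO_IPV6_IN_IPV4, 0, src, dst)
    checksum = ipv4_checksum(header)
    header = header[:10] + struct.pack("!H", checksum) + header[12:]
    return header + ipv6_packet

def decapsulate_6in4(ipv4_packet):
    """Strip the IPv4 header and return the inner IPv6 packet."""
    header_length = (ipv4_packet[0] & 0x0F) * 4
    if ipv4_packet[9] != PROTO_IPV6_IN_IPV4:
        raise ValueError("not a 6in4 packet")
    return ipv4_packet[header_length:]

inner = bytes([0x60]) + bytes(39)          # stand-in for a 40-byte IPv6 header
outer = encapsulate_6in4(inner, "192.0.2.1", "198.51.100.1")
print(len(outer), decapsulate_6in4(outer) == inner)
```

The tunnel endpoints are the only devices that need to understand both protocols. Routers in between see an ordinary IPv4 packet.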

A significant force driving IPv6 adoption is the cellular phone network, especially outside the US where the IPv4 shortage is more acute. The proliferation of smart phones means the network needs to hand out an IP address per person rather than per residence, greatly increasing the number of addresses needed.

1.1.6 Packet Routing

The term internet is a contraction of inter-network. The internet is literally a network of networks. Routers are used to forward packets between networks. Devices know whether or not a host they are trying to reach is local. To access a remote host, packets are forwarded to a router, called a gateway, attached to the local area network (LAN). The router uses its knowledge of connection topology to make intelligent forwarding decisions. This process is repeated multiple times until the packet finally reaches its ultimate destination. Routers learn connection topology by exchanging routing information. In the case of most residential customers this forwarding decision is trivial as there is only one connection to the internet.
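How does a device know whether a host is local? It compares the destination against its own address and subnet mask. The sketch below shows that decision; the function name and addresses are assumptions chosen for the example.

```python
import ipaddress

def first_hop(source_interface, destination, gateway):
    """Send directly if the destination is on the local network, else to the gateway."""
    iface = ipaddress.ip_interface(source_interface)   # address plus prefix length
    dest = ipaddress.ip_address(destination)
    return destination if dest in iface.network else gateway

# A PC at 192.168.1.20 with a 255.255.255.0 mask (/24) and gateway 192.168.1.1
print(first_hop("192.168.1.20/24", "192.168.1.77", "192.168.1.1"))   # local printer
print(first_hop("192.168.1.20/24", "203.0.113.9", "192.168.1.1"))    # remote web site
```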

1.1.7 Transit Network

Signing up with an ISP would not be very useful if the customer was limited to communicating only with other customers of the same ISP. The early internet consisted of a few nodes interconnected by point-to-point links rented from the old Bell System. As the internet grew it became apparent there was a need for a high-speed data network to interconnect high usage nodes. Transit providers span continents and oceans providing the backbone. Transit providers exchange traffic with each other and accept traffic from ISPs. Large companies, ISPs and governments often connect directly to one another, called Peering, eliminating the need to use a transit provider for some traffic. Smaller ISPs purchase bandwidth from third party wholesale suppliers. The end result is that regardless of how one connects it is almost always possible to communicate with anyone else on the internet.

This drawing is very simplified; typically all but the smallest ISPs will have multiple connections to various transit providers and often peering connections to other ISPs. Routing protocols choose the best route to deliver each packet. One of the network neutrality concerns is that ISPs will choose less congested routes for partners, resulting in slower performance if a customer is accessing a non-preferred site.

Figure 2 Peering

1.1.8 Multicast (IGMP)

The internet is a powerful communication medium. A user is able to connect to another host anywhere in the world virtually instantly. As powerful as this type of communication is, it is not well suited for broadcast, the delivery of one program to many subscribers simultaneously. The traditional broadcast business model grew out of the technical limitation of radio. The station owner built a transmitter and anyone within range was able to receive the broadcast.

The one-to-one connection model used by the internet makes it difficult to cost effectively broadcast programs since each listener requires a unique network session. IGMP (Internet Group Management Protocol), which receivers use to join a multicast group, creates the infrastructure to deliver a single stream to multiple users. At each branch a decision is made whether or not to forward the stream. If an active listener is downstream packets are forwarded; if not they are dropped. This conserves channel capacity by suppressing streams no one is listening to. Multicast dramatically reduces server load since only a single copy is transmitted. Internet broadcasting is still in its infancy and multicast is not commonly implemented by ISPs. For multicast to function each router between sender and receiver needs to support it.
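The branch-by-branch forwarding decision can be simulated in a few lines. This is a toy model rather than an implementation of IGMP itself. The tree, node names and helper functions are all made up for the example.

```python
# Hypothetical distribution tree: each node lists its downstream neighbors.
TREE = {
    "server": ["core"],
    "core": ["r1", "r2"],
    "r1": ["alice", "bob"],
    "r2": ["carol", "dave"],
}

def has_listener(node, listeners, tree=TREE):
    """True if node is a listener or any node below it is."""
    if node in listeners:
        return True
    return any(has_listener(child, listeners, tree) for child in tree.get(node, []))

def multicast_links(listeners, root="server", tree=TREE):
    """Links that carry the stream once branches without listeners are pruned."""
    links = []
    for child in tree.get(root, []):
        if has_listener(child, listeners, tree):
            links.append((root, child))
            links.extend(multicast_links(listeners, child, tree))
    return links

def copies_leaving_server(listeners, multicast, root="server", tree=TREE):
    """Streams the server must transmit: one per listener for unicast."""
    if multicast:
        return sum(1 for parent, _ in multicast_links(listeners, root, tree)
                   if parent == root)
    return len(listeners)

audience = {"alice", "carol", "dave"}
print(multicast_links({"alice"}))            # the r2 branch is pruned entirely
print(copies_leaving_server(audience, multicast=False))
print(copies_leaving_server(audience, multicast=True))
```

With unicast the server load grows with the audience. With multicast the server sends one copy and the network duplicates it only where the tree branches toward a listener.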

1.1.9 QoS (Quality of Service)

The internet is an egalitarian best effort network. This works amazingly well for transferring large chunks of data from point A to point B. The network continues to operate in the presence of all sorts of impairments and failures. However, best effort does not work well with latency critical applications such as telephony and streaming media when dealing with network congestion. For example a Voice over IP (VoIP) phone call requires round trip latency under 150 ms. Excessive delay makes carrying on a conversation difficult and, when extreme, virtually impossible. On the other hand if a print job is delayed a little no one is likely to notice as long as it completes successfully.

When a switch or router encounters congestion it buffers incoming packets until it is able to forward them. Normally this occurs on a first in first out (FIFO) basis. A Quality of Service (QoS) mechanism allows latency sensitive packets to receive priority queuing. This simple strategy works well if latency critical traffic is a small percent of the total. QoS marks packets with a priority level (DiffServ). When congestion occurs higher value packets are delivered first. Lower value packets are delayed, or discarded during periods of extreme congestion. QoS allows more graceful degradation by moving high priority packets to the head of the queue.
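The difference between FIFO and priority queuing is easy to see in a small simulation. The packet names and priority numbers below are invented for illustration and are not actual DiffServ code points.

```python
import heapq

def fifo_order(packets):
    """Packets leave in the order they arrived."""
    return [name for name, _priority in packets]

def priority_order(packets):
    """Higher priority leaves first; arrival order breaks ties."""
    heap = []
    for arrival, (name, priority) in enumerate(packets):
        # heapq is a min-heap, so negate priority to pop the highest first
        heapq.heappush(heap, (-priority, arrival, name))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]

# (name, priority) in arrival order during a congested moment
backlog = [("backup-1", 0), ("voip-1", 5), ("backup-2", 0), ("voip-2", 5)]
print(fifo_order(backlog))
print(priority_order(backlog))
```

Nothing is delivered any faster in total. The voice packets simply jump ahead of the backup traffic, which waits longer than it would have under FIFO.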

As discussed in a later section, traffic shaping and preferential packet treatment are controversial. Network Neutrality proponents are concerned ISPs will strike business deals with partners to preferentially deliver their data at the expense of competitors. It is important to remember Quality of Service mechanisms do not provide additional channel capacity. They simply redefine winners and losers. When channel capacity does not meet “offered load” (an old telecom term) some policy must be in place to deal with congestion. The PSTN managed congestion by withholding dial tone or returning an “all trunks busy” message when calls could not be completed. The internet handles congestion by delaying packets or in extreme cases dropping them. QoS controls which packets get delayed. Many argue deploying additional capacity is more cost effective than implementing a complex differential service mechanism.

To be maximally effective QoS requires end-to-end deployment. The technical and business problems facing QoS are much the same as those facing IGMP. There is little value until “everyone” deploys it and little incentive to be an early adopter. The ISP and all intermediate nodes need to monitor packet privilege level and treat packets accordingly. Controls at each level need to monitor statistics to prevent a “tragedy of the commons.” If too many packets ask for priority handling they all suffer.

Historically most residential broadband service is asymmetric; download is much faster than upload. There is benefit in shaping upload traffic so higher priority traffic is treated preferentially at the edge of the customer’s network. Customer’s edge router examines outbound packets and prioritizes them. Many residential routers already do this to a limited extent giving TCP/IP ACKs preferential treatment. Similar treatment may be applied to VoIP or critical gaming packets.

1.1.10 SLA (Service Level Agreement)

One of the main differences between residential and business accounts is the SLA (Service Level Agreement). SLA defines things like: minimum speed, maximum latency, service reliability and mean time to repair. The SLA imposes performance guarantees ISP must meet and penalties if they do not. This is one of the reasons business class service is so expensive. Residential accounts are typically best effort. If connection fails or experiences congestion ISP is under no obligation to correct problem on an expedited basis.
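Reliability and mean time to repair are often combined into a single availability figure. The sketch below uses the standard relationship availability = MTBF / (MTBF + MTTR), where MTBF is mean time between failures. The numbers are hypothetical and not taken from any actual SLA.

```python
def availability(mtbf_hours, mttr_hours):
    """Fraction of time the service is up, given failure and repair times."""
    return mtbf_hours / (mtbf_hours + mttr_hours)

def allowed_downtime_minutes(availability_target, period_hours=30 * 24):
    """Downtime budget in minutes over a period (default: a 30 day month)."""
    return (1 - availability_target) * period_hours * 60

# Hypothetical service: one failure every 2000 hours, 4 hours to repair
print(f"{availability(2000, 4):.4%}")
# A 99.9% guarantee leaves roughly 43 minutes of outage per 30 day month
print(f"{allowed_downtime_minutes(0.999):.1f} minutes")
```

A four hour repair time already breaks a 99.9% monthly guarantee after a single failure, which gives some feel for why expedited repair is costly to promise.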

1.1.11 AUP (Acceptable Use Policy)

The AUP (Acceptable Use Policy) defines customer responsibility, how service may be used and the penalty for misuse. For example, residential customers are typically prohibited from reselling access or running servers, and ISPs often block certain types of traffic. In an attempt to reduce cost some residential ISPs impose usage caps to limit monthly download and upload. Most ISPs reserve the right to revise the AUP at any time, making for a pretty one-sided contract.

1.1.12 CALEA (Communications Assistance for Law Enforcement Act)

CALEA passed in 1994 and has been greatly expanded over the years. It requires the ISP to install special equipment to facilitate wiretapping of customers’ digital traffic by law enforcement. Originally it was limited to voice traffic but it has been expanded to include all ISPs. There is a lot of pressure on ISPs to retain customer web browsing history and to make it available to law enforcement and antiterrorism agencies. This has been especially prevalent in Europe but is also happening in the US.

1.1.13 Technical Support

Regardless of how good service is, on occasion it will be necessary to contact technical support to resolve problems. Tech support responsiveness dramatically affects overall customer satisfaction.

Most residential broadband providers offer only limited help in troubleshooting problems. Finger pointing can be frustrating when a customer is trying to resolve a complex interaction and the ISP does not consider it their responsibility. Specialized web sites such as DSLReports can be an effective alternative. DSLReports is a good example of an internet community; members post questions and assist each other in dealing with network issues.

1.1.14 Billing

ISPs would not stay in business long if they could not charge for service. During the Dotcom era some dialup ISPs offered advertising supported free access; those companies are long gone.

Most ISPs offer flat rate billing based on speed tier. Monthly cost is based on connection speed, not how much the service is used. Some ISPs set monthly usage quotas; exceeding the monthly cap results in an extra charge or a reduction in speed. Caps are controversial because usage measurements tend to be inaccurate and they have little to do with the cost of providing service. Caps are pretty common among Cable and wireless providers, which often impose a significant surcharge for overuse. Andrew Odlyzko has written extensively about customer pricing preferences: what people are willing to pay for and how they prefer paying for it.
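As a rough illustration of how a flat rate tier with a usage cap might be billed, consider the sketch below. The prices, cap and overage block size are made-up numbers, not any ISP's actual rates.

```python
import math

def monthly_bill(tier_price, usage_gb, cap_gb=None,
                 overage_block_gb=50, overage_block_price=10.0):
    """Flat tier price plus a surcharge for each started block over the cap."""
    if cap_gb is None or usage_gb <= cap_gb:
        return tier_price               # uncapped, or under the cap: flat rate
    blocks = math.ceil((usage_gb - cap_gb) / overage_block_gb)
    return tier_price + blocks * overage_block_price

print(monthly_bill(60.0, 300))                   # no cap: usage is irrelevant
print(monthly_bill(60.0, 900, cap_gb=1000))      # under the cap
print(monthly_bill(60.0, 1120, cap_gb=1000))     # 120 GB over: three blocks
```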

There is no comparable notion of telephone long distance in the internet world. It does not cost any more to access a web site around the world than one across the street. Back in the early days of telephone it was very difficult and expensive to transport calls over long distances. The advent of fiber optic technology has reduced transmission cost so it represents only a small fraction of the cost to deliver internet access. The distance independent paradigm of the internet is changing how traditional telephone calls are billed. By way of example the phone service provided by our ISP does not impose per minute charges for domestic or Canadian phone calls, nor does our cell phone provider.

1.2 Common But Non-essential Services

This section examines services often provided by ISPs but that can be provided by third parties or in some cases even the customer. This distinction is important in the Network Neutrality debate. If an ISP decides to offer a non-standard or value-add service and customer or a third party is able to supply a similar service the impact is dramatically different than if the ISP implements a proprietary core service.

1.2.1 DNS (Domain Name System)

I struggled with whether to put DNS in the essential or non-essential section.

DNS (Domain Name System) translates the host name in a URL (Uniform Resource Locator) to an IP address. Without DNS web sites would have to be accessed by IP address. DNS is a hierarchical, globally distributed database. The DNS name space is evaluated right to left. The naming convention begins with the root domain, an implied “.” at the extreme right of the name. Next in the hierarchy are the top level domains (TLDs) such as com, gov, edu, uk and ru, then the registered domain name (tschmidt is my registered domain within the .com top level domain), then one or more subdomains. As each level is traversed it provides information about the next lower level until ultimately the IP address of the particular host server is determined.
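The right-to-left evaluation can be shown with a short sketch that lists the levels consulted for a name. It only manipulates the text of the name and performs no actual DNS queries. The host label "www" is an assumption for the example.

```python
def delegation_chain(hostname):
    """List the levels consulted, from the implied root down to the full name."""
    labels = hostname.rstrip(".").split(".")
    chain = ["."]                              # the implied root
    for i in range(len(labels) - 1, -1, -1):   # walk the labels right to left
        chain.append(".".join(labels[i:]) + ".")
    return chain

for zone in delegation_chain("www.tschmidt.com"):
    print(zone)
```

Each line printed corresponds to one level of the hierarchy. The servers for a given level know where to find the servers for the level beneath it.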

If DNS is unable to resolve a domain name it returns an error message. Some ISPs have attempted to monetize incorrect URL entry by returning an advertising supported web page if the URL cannot be resolved. DNS redirection is controversial. Some customers may find redirection useful, others not.

There are lots of public DNS servers available if you do not like the one provided by your ISP. They can also be handy for troubleshooting if your ISP is experiencing DNS problems. For many years I used the popular TreeWalk program to run my own DNS resolver. The web site has expired so I can no longer recommend using it. Gibson Research has a handy DNS benchmarking tool to test performance of multiple resolvers.

So why did I say I struggled with this topic, since it is obvious you do not have to use your ISP’s DNS server? The issue is CDNs (content distribution networks). CDNs cache content physically close to the end user, sometimes even at the ISP data center. Using a DNS resolver other than the one provided by your ISP can actually degrade performance. This is because the public DNS server will be unaware of any private arrangements between the ISP and CDN, and its physical location is likely significantly different than that of the ISP DNS server. The result is the public DNS server may return the IP address of a non-optimum CDN edge server, degrading performance.

1.2.2 E-Mail

It used to be common for an ISP to provide email. It is wise to consider an ISP email account a throwaway. If you change ISPs or the ISP is sold your email address changes, making it difficult for folks to stay in touch. With the availability of third party email services some ISPs no longer offer email. For a more permanent address use one of the free email services such as Yahoo or Gmail, or better yet register your own domain.

One useful way to use ISP email is for home automation devices. We have several that send notification emails, either at a fixed time of day or due to certain events. Sending these emails from your ISP account to another email account is a great way to verify both are operating properly.

1.2.3 Usenet

Usenet Newsgroups are text based and predate the web, and have fallen out of favor. Most ISPs used to include Usenet access. Due to declining interest in Usenet and legal attacks related to pornography and copyright issues many ISPs have eliminated support. Usenet access is available from a number of specialized companies. Usenet Compare has a nice comparison list of newsgroup providers.

1.2.4 Web Hosting

Some ISPs provide web site hosting for residential customers. This allows customers to have an internet presence without having to register a domain name or run their own web server. ISP runs a virtual server enabling many low traffic web sites to run on a single computer. ISP web hosting is a boon to residential customers by providing a painless way to create a web presence. As with email use of the ISP web server binds customer’s web site to the ISP. There are many hosting alternatives that decouple personal web sites from the specific ISP.

1.2.5 Cloud File Storage

The cloud is the current buzzword for outsourcing services over the internet. Many ISPs offer some form of network storage either as part of the plan or as an extra cost add on. Storing your information over the internet means you can access it from anywhere without the need to run your own server and if your house burns down or computer crashes your data is safe. On the other hand the fate of your data is in the hands of others.

1.2.6 VPN (Virtual Private Networking)

A VPN uses the public internet to create private communication paths. Depending on how it is implemented it may be a feature that only the ISP is able to deliver or something the customer or a third party is able to engineer. Once the province of large companies, VPNs are attractive for any customer that needs to securely access their network remotely.

Another benefit of using a third party VPN is that they offer location services, allowing you to appear to be located anywhere in the world.

Large companies make extensive use of MPLS (Multiprotocol Label Switching) to implement a geographically dispersed corporate LAN. To users, regardless of location, resources appear to be on the LAN. The service provider configures edge routers such that data presented to them is delivered to the correct physical location. The ISP isolates each company’s traffic so it is invisible to other companies.

Figure 4 MPLS VLAN

It is also possible for customers to create their own VPN using IPsec. In this case customer, rather than service provider, creates a secure end-to-end path through the public internet. IPsec is used extensively to support satellite offices and telecommuters.

SSL/TLS is another mechanism used to provide end-to-end privacy. SSL was originally developed by Netscape to protect web based financial transactions. Because it is built into all browsers many companies are using it, rather than IPsec, to provide remote employee access.

1.2.7 VoIP (Voice over IP)

The PSTN (public switched telephone network) represents over a hundred years of engineering. Packet based telephony has become a serious contender. Rather than traditional circuit switching VoIP uses packet-based communication to deliver two-way real time voice. Voice communication is very demanding. Voice data rate is low by internet standards, only 8-64 kbps in each direction. However latency is critical. If packets are delayed more than a few hundred milliseconds voice quality is seriously degraded.
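The 8-64 kbps figure is the codec rate alone. Each voice packet also carries RTP, UDP and IP headers, which add noticeably to what actually crosses the link. The sketch below estimates the per-direction rate. It assumes IPv4, a 20 ms packet interval (a common choice, but an assumption here) and ignores link layer overhead such as Ethernet or PPPoE.

```python
RTP_HEADER = 12    # bytes
UDP_HEADER = 8     # bytes
IPV4_HEADER = 20   # bytes, without options

def voip_bandwidth_kbps(codec_kbps, packet_ms=20):
    """IP-level bandwidth in one direction for a given codec rate."""
    payload_bytes = codec_kbps * 1000 / 8 * packet_ms / 1000
    packets_per_second = 1000 / packet_ms
    total_bytes = payload_bytes + RTP_HEADER + UDP_HEADER + IPV4_HEADER
    return total_bytes * 8 * packets_per_second / 1000

print(voip_bandwidth_kbps(64))   # 64 kbps codec becomes 80 kbps on the wire
print(voip_bandwidth_kbps(8))    # 8 kbps codec becomes 24 kbps on the wire
```

For the low rate codec the headers are twice the size of the voice payload. Even so, the totals are small compared with typical broadband upload speeds, which is why latency rather than raw bandwidth is the usual problem.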

Figure 5 Voice over IP

If the ISP offers VoIP check the service thoroughly. The asymmetric nature of most residential service, upload being much lower than download, makes it easy to saturate the connection. Quality of Service (QoS) may be required to mark VoIP packets as high priority so they get preferential treatment. Various encoding schemes are used by VoIP that may degrade voice quality compared to traditional phone service. The local Telco that provides our fiber based internet service also provides landline telephone service over the same fiber using VoIP. Over the several years we have had fiber, phone service has been fine.

1.2.7.1 Number Portability

In the US the FCC mandates telephone number portability. In most cases you will be able to transfer an existing wire-line or cellular phone number to a new service provider.

When we replaced DSL with fiber internet we took advantage of number portability to transfer the landline phone number we have had for years. Similarly we have taken advantage of number portability when we have switched cellular providers.

Typically when transferring a phone number you need to contact your current service provider to obtain a security code. This prevents slamming, the illegal switching of telephone service.

1.2.7.2 E911

Voice over IP presents challenges for E911 emergency service. Unlike wire-line POTS (plain old telephone service) where telephone location never changes, a VoIP call can originate anywhere. Cellular networks have struggled for years to implement E911 service using triangulation or GPS to locate subscribers. When we moved our landline to VoIP as part of the transition to fiber we needed to update location information for 911 response.

1.2.7.3 Fixed Mobile Convergence

There is interest in multimode cellular phones able to utilize both traditional cellular network and opportunistically, WiFi networks. Fixed Mobile Convergence represents a win-win situation for both customer and wireless provider. For providers it utilizes the vast potential of the internet and private LANs to remove traffic from expensive cellular radio networks. For customer it represents potentially lower cost and improved performance. For business it represents a way to eliminate traditional PBX wired telephone infrastructure without paying extravagant per minute charges. Depending on national legal restrictions it may offer arbitrage advantage for multinational corporations to treat voice like email, bypassing local phone companies and eliminating per minute charges.

Figure 6 Fixed Mobile Convergence

1.2.7.4 Femtocells

An alternative to WiFi calling is the femtocell, offered by several Cellular phone companies. Femtocells are low power cellular base stations that utilize a customer’s broadband connection to deliver coverage to a single home. As with WiFi, Cellular providers like it because it moves traffic off cell stations.

Femtocells should not be confused with Cellular repeaters (boosters). Boosters simply amplify radio signals between a cell phone and cell tower. Installation typically involves mounting an outdoor antenna pointed toward the desired cell tower and an indoor antenna located to increase coverage within the residence without causing interference with the outdoor directional antenna.

WiFi calling is built into our Android phones, which is handy here in terrain challenged NH where we have poor cell phone coverage at home.

1.2.7.5 Roaming

A difficult problem is seamless roaming between networks. To a limited extent this is already being done by WiFi as a user moves between Access Points. However for this to work all APs must be under the same administrative control. In an ideal world a device associates with a network and as it moves it automatically reconnects to the best network at the new location without any interruption in service. As an example imagine a user beginning a WiFi session at home, then getting in their car, moving out of range and being handed off to the Cellular network. They stop for breakfast and are back within range of a different WiFi network, and lastly they arrive at work and join the corporate LAN. The IEEE 802.21 media independent handover services working group tackled this difficult problem.

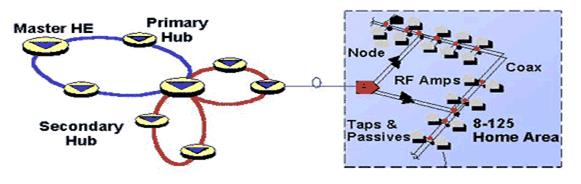

1.2.8 IP Television

Over the Air, Cable and DBS TV all use basically the same transmission scheme. RF spectrum is divided into channels. US TV channels are 6 MHz wide, in Europe 8 MHz. Channels were initially specified to carry a single analog standard definition TV program. Migration to digital transmission allows each channel to carry multiple high definition (HDTV) and/or standard definition (SDTV) programs.

IPTV, sometimes called OTT (over the top), represents a fundamentally different way to deliver TV leveraging packet-based technology. IPTV opens the door to demand based programming. The traditional broadcast model is one-to-many, an artifact of radio transmission. Once a transmitter is set up anyone within range is able to receive the program. Video on demand (VoD) is like going to the library, rather than changing channels. One simply selects the program of interest and it is delivered virtually instantly anywhere anytime to any device the end user chooses.

Using MPEG-2 compression SDTV requires about 2 Mbps and HDTV 15 Mbps. MPEG-4 yields significantly lower data rates for equal image and sound quality. These rates are the result of spatial (within the picture) and temporal (over time) data compression. The raw data rate is much too high to be delivered economically.
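To give a feel for why uncompressed video is impractical, the following is a rough sketch that estimates raw bit rates and compares them with the MPEG-2 figures quoted above. The frame sizes, frame rate, and 24 bits per pixel are illustrative assumptions, not values from this paper.

```python
def raw_video_mbps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed video bit rate in Mbps for the given frame format."""
    return width * height * fps * bits_per_pixel / 1_000_000


# Assumed frame formats for illustration only.
sd_raw = raw_video_mbps(720, 480, 30)      # standard definition
hd_raw = raw_video_mbps(1920, 1080, 30)    # high definition

# Compare against the compressed rates quoted in the text (2 and 15 Mbps).
print(f"SD raw {sd_raw:.0f} Mbps, about {sd_raw / 2:.0f}:1 versus 2 Mbps MPEG-2")
print(f"HD raw {hd_raw:.0f} Mbps, about {hd_raw / 15:.0f}:1 versus 15 Mbps MPEG-2")
```

Under these assumptions the compression ratio is on the order of 100:1 in both cases.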

Video on demand represents many challenges compared to traditional broadcast. Each user is able to start/stop the program at any time requiring a discrete program feed to each user rather than a single feed to all users as with broadcast.

Figure 7 IP TV

Historically residential ISPs assumed a customer traffic model of primarily bursty download traffic such as loading web pages or accessing email. Streaming video and to a lesser extent streaming audio lock up significant bandwidth for extended periods of time. This is much more demanding than browsing.

IPTV dramatizes the disruptive nature of the internet. Since the end of WWII Cable companies have wired areas to deliver broadcast TV over coax and more recently hybrid fiber coax networks. The Cable network is intimately bound to TV delivery. As residential broadband speed increases the door opens for new providers to bundle content and deliver it without the need to either build or own the means of local delivery.

1.2.9 IP Radio

ISPs do not appear much interested in becoming content aggregators for radio the way they are for TV. But other than much lower bandwidth the requirement for internet radio is not much different than internet TV. Many broadcast FM stations also have a streaming service. Radio-Locator is a convenient way to find radio stations.

1.3 Content Delivery Network (CDN)

Basic to the design of the internet is the notion of direct end-to-end communication. When Computer A wants to exchange data with Computer B routers between the two move packets the most efficient way they can on a packet by packet basis. The popularity of streaming video services like YouTube and Netflix stresses the network as millions of users access the same content from diverse locations.

Figure 8 Content Delivery Network

Video is very bandwidth intensive. Video on demand requires a one-to-one connection between user and server as opposed to the one-to-many model of traditional broadcast. Being demand based, each user may be viewing a different program or a different time within the same program. To address the interest in VoD (video on demand) specialized service providers called CDNs (Content Delivery Networks) have become popular. A CDN replicates programs on many caching servers located near the ultimate end user. Often CDNs have special peering arrangements with large ISPs or are located within the ISP’s data center itself. CDNs reduce the amount of traffic flowing over the internet transit network because they are able to source the file near where it is being viewed. When a customer requests a particular program the ISP’s DNS servers return the address of the local caching server to most efficiently stream the program to the customer.

1.4 Connection Sharing

Historically when a customer contracted with an ISP they were given a block of IP addresses large enough to meet their needs. The IPv4 address shortage forced ISPs to rethink how they allocate addresses. Most residential broadband ISPs restrict customer to a single IPv4 address. This creates a quandary; how to cost effectively connect multiple hosts to the internet? The most common workaround is NAT (Network Address Translation) coupled with use of private IP addresses. RFC 1918 reserves three blocks of IP addresses guaranteed not used on the internet. Because these addresses are not used on the public internet they can be reused multiple times.

Combining NAT (more properly Network Address Port Translation, since both address and port number are modified) with private addresses allows a virtually unlimited number of computers to share an internet connection even though the ISP only provides a single address. NAT provides translation between private addresses on the LAN and the single public address issued by the ISP on the WAN.

NAT only affects non-local communication. When a request cannot be serviced locally it is passed to the NAT router, called a gateway. The router modifies packets by replacing private address with public address issued by ISP and if needed modifies port number to support multiple sessions and calculates a new checksum. The router sends the modified packet to remote host as-if-it-originated-from-the-router. When router receives the reply the modifications are reversed and the packet forwarded to the originating host. Router tracks individual sessions so multiple computers are able to share a single address. From the internet’s perspective local hosts are invisible. The router looks like a single computer with the address of the public IP issued by the ISP.
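The session tracking described above can be sketched as a pair of lookup tables. This is a toy model only: the class name `NaptRouter`, the starting port 40000, and the example addresses are hypothetical, and a real router also tracks protocol, remote endpoint, and session timeouts.

```python
from itertools import count


class NaptRouter:
    """Toy NAPT gateway: many private hosts share one public address."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self._next_port = count(first_port)  # public-side ports handed out in order
        self._out = {}  # (private_ip, private_port) -> public_port
        self._in = {}   # public_port -> (private_ip, private_port)

    def outbound(self, src_ip: str, src_port: int):
        """Rewrite the source of an outgoing packet and remember the mapping."""
        key = (src_ip, src_port)
        if key not in self._out:
            public_port = next(self._next_port)
            self._out[key] = public_port
            self._in[public_port] = key
        return self.public_ip, self._out[key]

    def inbound(self, dst_port: int):
        """Reverse the translation for a reply; None means no session, so drop."""
        return self._in.get(dst_port)


router = NaptRouter("203.0.113.7")
first = router.outbound("192.168.1.10", 51000)
second = router.outbound("192.168.1.11", 51000)   # same private port, different host
print(first, second)                 # both leave with the public address, different ports
print(router.inbound(second[1]))     # reply is mapped back to 192.168.1.11
print(router.inbound(12345))         # unsolicited traffic has no mapping
```

The last call illustrates why unsolicited incoming requests go nowhere unless a port forwarding rule is added.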

IPv6, with its vast address range, does not require NAT. Each device will have its own public IP address. This changes the nature of residential routers. NAT, though not technically a firewall, blocks all incoming connection requests from remote hosts. Unless specifically programmed with port forwarding rules it does not know which device on the LAN to forward the request to. This default behavior is lost with IPv6. Residential routers that support IPv6 should block incoming connection requests unless specifically programmed otherwise.

1.5 Carrier Grade NAT

The IPv4 shortage is so severe that some ISPs are not able to obtain enough public addresses to support their customers. Carrier Grade NAT (CGNAT) works much like end user NAT but uses a different reserved block of IP addresses, 100.64.0.0/10, defined in RFC 6598. In general this is transparent to the customer unless they want to operate a server.
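One way to tell whether the WAN address shown on a router status page is behind CGNAT is to check which reserved block it falls in. The sketch below uses Python's standard `ipaddress` module with the three RFC 1918 blocks and the RFC 6598 block; the function name `classify` and the sample addresses are made up for illustration.

```python
import ipaddress

# RFC 1918 private blocks used on customer LANs.
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]
# RFC 6598 shared address space used between ISP and customer for CGNAT.
CGNAT_BLOCK = ipaddress.ip_network("100.64.0.0/10")


def classify(addr: str) -> str:
    """Report which reserved block, if any, an IPv4 address belongs to."""
    ip = ipaddress.ip_address(addr)
    if any(ip in net for net in RFC1918_BLOCKS):
        return "private (RFC 1918)"
    if ip in CGNAT_BLOCK:
        return "carrier grade NAT (RFC 6598)"
    return "other"


for sample in ("192.168.1.20", "100.72.3.4", "8.8.8.8"):
    print(sample, "->", classify(sample))
```

A WAN address in the RFC 6598 range suggests the ISP is performing a second layer of translation, in which case port forwarding on the customer router alone will not make a server reachable.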

1.6 Blocked Ports

The internet is designed as a transparent end-to-end bit delivery network. This means any host is able to communicate with any other host. TCP/IP and UDP/IP use ports so a host is able to manage multiple simultaneous sessions. Port numbers are 16-bit unsigned values, yielding 65,536 values (0 through 65,535) for each connection type. When a service is defined a port number is selected for initial contact. This is called the well-known port. For example the well-known port for HTTP Web access is 80. When a remote user attempts to connect it sends the request to TCP port 80. Once the connection is established it is identified by the combination of addresses and ports at both ends; the client end normally uses a temporary port number chosen for that session. An analogy is to think of the well-known port as a doorbell. If the ISP blocks access to the well-known port remote users are unable to connect.

It is common practice for residential ISPs to block incoming port 80 to prevent customers from running web servers, outgoing port 25 (used to send email) to reduce spam, and ports 137, 138, 139, and 445 to prevent remote access to Windows LAN based SMB file sharing. In an effort to reduce file trading some ISPs throttle or block ports used by peer-to-peer file trading applications. Impact of blocked ports varies.

To get around blocked port it is easy to reconfigure the server to use a non-standard port. If access is limited to a small group of friends it is easy enough to simply inform everyone which port to use. If goal is wider public access use of nonstandard ports is a problem. Without knowing the port number remote users are unable to connect. URL forwarding is a technique to work around this restriction.

1.7 Traffic Shaping

The internet is an egalitarian best effort network. This means as packets arrive they are processed on a first come first served basis. With enough channel capacity incoming packets never have to wait.

Residential ISPs made assumptions about typical customer usage when they set monthly charges and designed infrastructure. The business model assumed bursty data flow: predominantly web browsing, email, and occasional file download. Proliferation of Peer-to-Peer file trading and streaming video services, such as YouTube and IPTV, upset these assumptions. ISPs are struggling to carry more traffic than originally planned.

Some ISPs are responding with traffic quotas. When customer exceeds quota either speed is reduced or additional charge incurred. There have been numerous stories of unwitting customers being billed for thousands of dollars in overage charges on their cell phone data account. On the other hand some ISPs detect undesirable traffic and throttle speed rather than blocking it entirely.

1.8 Usage Caps

ISPs often justify usage based pricing as a way to control congestion; however congestion is a temporal phenomenon having little to do with aggregate usage. Congestion only occurs when instantaneous demand exceeds capacity. As has been well documented usage caps are really being used to generate additional revenue or to protect legacy business models.

The other common complaint is the measuring technique is not very accurate.

1.9 Digital Rights Management

The proliferation of digital media devices and networking is making the traditional media world nervous because digital technology allows rapid lossless copying. From a technology standpoint the DRM (digital rights management) mechanisms used to prevent this have been a spectacular failure and in some cases have actually caused damage to end-user devices.

If you or someone on your network is found to be violating copyright law the owner will notify the ISP and the ISP will in turn notify you of the violation.

1.10 Deep Packet Inspection

Some ISPs use a technique called DPI (Deep Packet Inspection) to determine how customer is using the internet and block or throttle use they deem harmful. DPI can also be used to obtain additional information about customer’s internet usage. This data is of interest to targeted marketing vendors. The use of DPI falls into a grey area of what is and is not acceptable ISP behavior. In addition many governments want to know about what their citizens are doing and press ISPs to track customer usage.

1.11 Latency vs Speed

In the quest for ever-faster speed it is important not to lose sight of the interplay between speed and latency. As an example a truck carrying DVDs exhibits very high speed (bits per second) once it arrives but also high latency because it takes hours or days for the data to arrive. Round trip latency is defined as time it takes a packet to go from source to destination and back again. Factors affecting latency are: connection speed, modem overhead, distance, propagation speed, and network congestion.

Modems operate on “chunks” of data, increasing latency because the entire block must be processed before being passed to the next stage. Data cannot be used until the last bit in the block is received. Low speed connections such as dialup often use smaller packet size to minimize this effect.

Light travels 186,000 miles per second in vacuum. Optical fiber is somewhat slower, about 70% of the speed of light in vacuum. A packet traveling the 3,000 miles from New York to LA takes about 25 ms in each direction. To this one must add delay at each router between source and destination. Normally this delay is negligible but if the network becomes congested a router must temporarily store incoming packets until the outgoing path is free. In extreme cases the router will discard packets. When packets are lost the upper level protocol either requests retransmission (TCP/IP) or in the case of streaming data (UDP/IP) the receiver has to fake missing data.
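The propagation component of latency can be estimated directly from the figures above. This is a minimal sketch assuming a straight-line fiber path; real routes are longer, so the result is a lower bound that lands in the same range as the roughly 25 ms quoted in the text. The function and constant names are illustrative.

```python
C_VACUUM_MILES_PER_S = 186_000   # speed of light in vacuum, from the text
FIBER_VELOCITY_FACTOR = 0.70     # fiber is about 70% of vacuum speed, from the text


def one_way_delay_ms(distance_miles: float,
                     velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Propagation delay in milliseconds over the given distance."""
    speed = C_VACUUM_MILES_PER_S * velocity_factor   # miles per second in fiber
    return distance_miles / speed * 1000


ny_to_la = one_way_delay_ms(3000)
print(f"one way: {ny_to_la:.1f} ms, round trip: {2 * ny_to_la:.1f} ms")
```

No amount of added bandwidth reduces this component; only a shorter path or a faster medium does.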

Impact of latency is heavily dependent on data type. Interactive use such as gaming and VoIP telephony place stringent demands on round trip latency but do not require much bandwidth. File transfer on the other hand is relatively insensitive to latency but places great importance on speed.

Typical first-hop latency varies due to transmission speed and distance: T1 or FTTP 1ms, Cable/DSL 5-30ms, Dialup 100 ms, Geosynchronous Satellite 500ms. For a more in-depth explanation see “It’s the Latency Stupid.”

1.12 Asymmetric Speed

Many residential ISPs provide asymmetric speed: download is much faster than upload. This is done for technical and business reasons. Asymmetric speed allows ISP to position residential service differently than business and charge higher fee for business class service. With the proliferation of residential fiber symmetric download and upload has become more common.

Low upload speed makes it difficult to run a server or use Voice over IP since upload pipe is easily saturated.

1.13 Measuring Speed

The end user LAN is rarely the determinant of internet speed, as wired and wireless LAN performance normally exceeds internet access speed. Speed is typically limited by the first-mile WAN connection. It can be a challenge teasing out the various components of end-to-end performance to see if the ISP is working as advertised.

IP transmission splits data into chunks called packets, typically up to 1500 bytes (1 byte = 8 bits). Some of the 1500 bytes are used for network control so are not available for user data. TCP/IPv4 uses 40 bytes (TCP/IPv6 60 bytes) of the 1500 for control. NOTE: this analysis assumes use of maximum size packets. Since overhead is fixed, using smaller packets incurs a higher overhead percentage. With 40 bytes reserved for control out of every 1500 bytes sent only 1460 are available for data. This represents 2.7% overhead.

Some ISPs, typically phone companies, use a protocol called Point-to-Point Protocol over Ethernet (PPPoE) to transport DSL data. This is an adaptation of PPP used by dialup ISPs. Telcos like PPPoE because it facilitates support of third party ISPs as mandated by the FCC. PPPoE adds 8 bytes to each packet, increasing overhead to 48 bytes and reducing payload to 1452. Where PPPoE is used overhead is increased to 3.2%.

DSL connections typically use Asynchronous Transfer Mode (ATM) with AAL5 to carry traffic. ATM was designed for low latency voice telephony. When used for data it adds significant overhead. ATM transports data in 53-byte cells of which only 48 bytes are payload; the other 5 are control. Each 1500-byte packet is split into multiple ATM cells. A 1500-byte packet requires 32 cells (32 x 48 = 1,536 bytes). The extra 36 bytes are padding, further reducing ATM efficiency. 32 ATM cells require the modem to transmit 1,696 bytes of which only 1452 carry payload. Where ATM/PPPoE is used overhead is increased to 14.4%.

TCP/IP: overhead 2.7%, efficiency 97.3%

TCP/IP/PPPoE: overhead 3.2%, efficiency 96.8%

TCP/IP/PPPoE over ATM: overhead 14.4%, efficiency 85.6%
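The arithmetic behind these three figures can be reproduced with a short calculation. This sketch follows the simplified model used in this section (full 1500-byte packets, 40 bytes of TCP/IPv4 header, 8 bytes of PPPoE, 53-byte ATM cells with 48 bytes of payload); the function name and parameters are illustrative.

```python
import math


def payload_efficiency(packet: int = 1500, header: int = 40,
                       pppoe: bool = False, atm: bool = False) -> float:
    """Fraction of transmitted bytes that carry user data."""
    payload = packet - header - (8 if pppoe else 0)
    if atm:
        cells = math.ceil(packet / 48)   # packet is carved into 48-byte cell payloads
        wire_bytes = cells * 53          # each cell adds a 5-byte header
    else:
        wire_bytes = packet
    return payload / wire_bytes


for label, kwargs in [
    ("TCP/IP", {}),
    ("TCP/IP/PPPoE", {"pppoe": True}),
    ("TCP/IP/PPPoE over ATM", {"pppoe": True, "atm": True}),
]:
    eff = payload_efficiency(**kwargs)
    print(f"{label}: overhead {100 * (1 - eff):.1f}%, efficiency {100 * eff:.1f}%")
```

Multiplying the modem sync rate by the efficiency gives a best-case estimate of what a speed test can report.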

NOTE: This is best-case speed. Errors, transmission delays, etc. will reduce speed from this value. The higher the speed the greater the impact of even modest impairments on throughput.

It is easy to determine best-case file transfer rate if the modem data rate is known. Broadband marketing rate may not be the same as modem transfer rate. Some ISPs set transfer rate higher than marketed speed to compensate for overhead. That way the speed test result will be close to marketed speed. Most broadband modems have a status page allowing the user to observe true transfer rate. This is the rate at which the modem connects to the ISP, not the speed at which the computer connects to the modem or router, which is typically 10 Mbps, 100 Mbps or 1 Gbps.

By way of example our Fidium fiber internet is marketed as 100/100 Mbps.

Actual speed test result reported by Speedtest.net shown below.

Figure 9 Speed Test Result

1.14 Speed Optimization

TCP requires the receiver to periodically send an acknowledgement (ACK) to let the sender know data arrived. The receive window (RWIN) is the amount of data the sender may transmit before it must stop and wait for an acknowledgement. For high speed connections or where latency is high the default receive window should be increased to prevent pauses in transmission. Most modern operating systems do a good job optimizing RWIN so little is gained by changing it.
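A common rule of thumb for sizing the receive window is the bandwidth-delay product: link rate multiplied by round trip time. The sketch below is a back-of-envelope calculation under that assumption, not a tuning recommendation; the function names and the 100 Mbps / 50 ms example are illustrative.

```python
def required_window_bytes(rate_mbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to keep a link of this rate busy."""
    return rate_mbps * 1_000_000 / 8 * rtt_ms / 1000


def max_rate_mbps(window_bytes: float, rtt_ms: float) -> float:
    """Best-case throughput when at most one window is sent per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


# Illustrative case: 100 Mbps service with a 50 ms round trip.
print(f"window needed: {required_window_bytes(100, 50):,.0f} bytes")
# 65,535 bytes is the largest window TCP can advertise without window scaling.
print(f"rate with a 65,535 byte window: {max_rate_mbps(65_535, 50):.1f} Mbps")
```

The second figure shows why a small fixed window caps throughput on long paths regardless of how fast the access link is.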

If router supports QoS having it give ACKs priority will improve file transfer rate if upload becomes congested.

The other important tweak is packet size, called the MTU (maximum transmission unit). Maximum packet size is typically limited to 1500 bytes. Normally this setting is fine for broadband access; dialup uses a much lower MTU, typically 576. PPPoE encapsulation adds 8 bytes to each packet. This reduces maximum packet size to 1492 bytes. If the sender attempts to transmit a larger packet it will either be rejected or fragmented into two parts, with attendant performance degradation.
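The effect of the 8-byte PPPoE header on packet sizing can be worked through as follows. This is a sketch assuming IPv4 with 20-byte IP and 20-byte TCP headers and no options; the helper names are hypothetical. Fragment payloads are rounded down to a multiple of 8 bytes because IPv4 fragment offsets are expressed in 8-byte units.

```python
import math

IP_HEADER = 20        # IPv4 header without options
TCP_HEADER = 20       # TCP header without options
PPPOE_OVERHEAD = 8    # bytes PPPoE adds to each packet


def link_mtu(ethernet_mtu: int = 1500, pppoe: bool = False) -> int:
    """Largest IP packet the link can carry."""
    return ethernet_mtu - (PPPOE_OVERHEAD if pppoe else 0)


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload that fits in one unfragmented packet."""
    return mtu - IP_HEADER - TCP_HEADER


def fragments_needed(packet_size: int, mtu: int) -> int:
    """Number of IPv4 fragments required to carry a packet over this MTU."""
    if packet_size <= mtu:
        return 1
    payload = packet_size - IP_HEADER
    per_fragment = (mtu - IP_HEADER) // 8 * 8   # payload per fragment, 8-byte aligned
    return math.ceil(payload / per_fragment)


pppoe_mtu = link_mtu(pppoe=True)
print("PPPoE MTU:", pppoe_mtu, "MSS:", tcp_mss(pppoe_mtu))
print("fragments for a 1500-byte packet:", fragments_needed(1500, pppoe_mtu))
```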

The availability of cheap high speed memory has led to a phenomenon called bufferbloat: unnecessarily large buffers in network routers. The large buffers result in overly long queuing delays when the network is congested.

1.15 Load Balancing vs Bonding

If one link is not able to deliver adequate speed the obvious solution is to add links. There are two ways to manage multiple links: load balancing and bonding.

With load balancing a router with multiple WAN ports is used to share the load. As connection requests come into the router from the LAN it determines which link to use based on link capacity and loading. A given session is constrained by the speed of whichever link it is assigned. Aggregate performance is increased because the router parcels out requests to all the links. A typical web page consists of dozens of separate HTTP sessions to different servers. Load balancing will help in that case. If you are downloading a single video load balancing will have no effect.

Bonding is transparent to IP; it looks like a single faster pipe. Bonding requires cooperation between the ISP and customer, whereas load balancing can be performed unilaterally by the customer. In the case of DSL bonding is typically performed by the ATM layer, which splits data among multiple ATM streams. DOCSIS 3 modems do something similar, allowing the ISP to allocate more than one channel for internet delivery.

While bonding is able to dramatically improve speed based on the number of connections, it has little if any effect on latency. The reason is the modem processing that must occur at each end is the same and, even though it is invisible to IP, bits need to travel over multiple paths and be reassembled before they are handed off to IP.
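The difference between the two approaches can be illustrated with a small model. The assignment policy shown (place each new session on the link with the lowest load relative to its capacity) is one plausible policy chosen for illustration, not the method of any particular router, and the bonded figure ignores any overhead bonding adds.

```python
def assign_sessions(num_sessions: int, link_speeds_mbps: list) -> list:
    """Load balancing: place each new session on the relatively least-loaded link."""
    counts = [0] * len(link_speeds_mbps)
    for _ in range(num_sessions):
        target = min(range(len(counts)),
                     key=lambda k: counts[k] / link_speeds_mbps[k])
        counts[target] += 1
    return counts


def best_single_session_mbps(link_speeds_mbps: list, bonded: bool) -> float:
    """Upper bound on the speed one session (e.g. one video download) can reach."""
    if bonded:
        return sum(link_speeds_mbps)   # bonding looks like one faster pipe
    return max(link_speeds_mbps)       # a balanced session is pinned to one link


links = [20, 10]   # two hypothetical WAN links, in Mbps
print("sessions per link:", assign_sessions(6, links))
print("single download, load balanced:", best_single_session_mbps(links, bonded=False))
print("single download, bonded:", best_single_session_mbps(links, bonded=True))
```

With many sessions both approaches use the combined capacity; the difference only shows up when a single session needs more than one link can provide.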

1.16 Servers and Dynamic IP Allocation

Most residential accounts are configured automatically each time the customer connects. A dynamically assigned IP address makes it difficult to run a server because the address may change at any time, preventing remote users from connecting until they learn the new address. Dynamic DNS service provides a workaround to run servers on dynamic accounts. A daemon runs on either the router or server to detect address changes. When a change occurs it notifies the DNS service which in turn automatically updates A records for the site. Even with automatic DNS update there will still be a period of time after the address changes where the server is not accessible and active sessions are aborted. Dynamic DNS services are really only suitable for casual personal servers, not business use.
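The update daemon described above amounts to a polling loop. The following is a minimal sketch with the two network operations passed in as functions; `get_public_ip` and `update_dns` are placeholders for whatever mechanism a given Dynamic DNS provider supplies, and the 300 second polling interval is an arbitrary assumption.

```python
import time


def watch_address(get_public_ip, update_dns, interval_s: float = 300,
                  max_checks=None, sleep=time.sleep) -> None:
    """Poll the public address and push a DNS update whenever it changes.

    get_public_ip and update_dns are caller-supplied placeholders; max_checks
    limits the number of polls (None means run forever).
    """
    last = None
    checks = 0
    while max_checks is None or checks < max_checks:
        current = get_public_ip()
        if current != last:          # first run or address changed
            update_dns(current)
            last = current
        checks += 1
        if max_checks is None or checks < max_checks:
            sleep(interval_s)


# Simulated run: the address changes on the third poll.
addresses = iter(["198.51.100.4", "198.51.100.4", "198.51.100.9"])
updates = []
watch_address(lambda: next(addresses), updates.append,
              max_checks=3, sleep=lambda seconds: None)
print(updates)
```

The gap between an address change and the next poll, plus DNS caching time, is the window during which the server is unreachable.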

1.17 When “Unlimited” Doesn’t Mean “Unlimited”

There has been much press about residential and cellular providers marketing unlimited service and then imposing usage caps or throttling heavy users. Some ISPs have gone so far as to call heavy users bandwidth hogs. The controversy is not about an ISP’s right to set terms of use but rather misleading marketing. It is about calling a service unlimited then throttling or disconnecting a customer if they use it too much.

1.18 When “Always On” Doesn’t Mean “Always On”

Broadband service is marketed as “always on.” Exactly what this means is subject to interpretation. The most “on” service is a bridged or routed connection configured with a static IP address. Once service is configured connection is permanent and always available until the next time the ISP needs to reallocate IP addresses or power fails.

DHCP (Dynamic Host Configuration Protocol) assigns the client an IPv4 address, at the client’s request, for a limited period called a lease. Before the lease expires the client attempts to renew. As long as the ISP continues to renew the lease the user is never disconnected. From the customer’s perspective service is always on; lease renewal is transparent. Some ISPs bind the IP address to the hardware MAC address. The same IP address is assigned as long as the customer does not change equipment. IPv6 uses somewhat different mechanisms, DHCPv6 prefix delegation (DHCPv6-PD) or Router Advertisement, but the end result is the same: customer equipment is automatically configured by the ISP.

Point-to-Point Protocol over Ethernet (PPPoE) or ATM (PPPoA) works like traditional PPP dialup. This type of service is common for ADSL. It leverages ISP investment in RADIUS authentication and billing equipment. The customer provides a username/password to authenticate; once authenticated the ISP issues an IP address. If the connection becomes idle the user is disconnected. Most residential routers include a keep-alive mechanism so the connection is never dropped. From the user’s perspective the connection is always on as long as the ISP is able to maintain an active RADIUS login session.

Some ISPs limit maximum connect time. After a certain number of hours connection is dropped and must be reestablished. This sort of behavior is common for dialup ISPs and WiFi Hotspots. When connection is dropped customer must log in again to regain internet access.

1.19 Security and Privacy

The internet is a rough and tumble place often likened to the Wild West. The power of worldwide connectivity means anyone on the planet with an internet connection is in a position to attack another connected computer. ISPs often block certain ports to reduce danger to unsophisticated users. Port blocking is a double-edged sword as it may interfere with a customer’s legitimate use of the internet. Some ISPs go further, acting as a firewall protecting the customer from hostile attack and examining email for dangerous content or attachments. Some users consider this a great feature in the battle against spam and viruses. Others see it as an unwelcome intrusion in what should be individual control of network access.

The ISP is privy to all traffic that flows through its system. This raises two concerns, nosey ISPs and subpoenas. The ISP can monitor how customers use the internet, what sites they go to, what email they send and receive and in some cases even snoop usernames and passwords if they are sent in the clear. Even if the session itself is encrypted the to/from destinations have to be visible for the internet to function. Privacy concerns have been exacerbated recently with expanded government snooping due to war on terrorism. US government asked ISPs to provide information about customer internet usage without a court order and in most cases ISPs complied. Internationally governments are mandating ISPs retain customer traffic information for years. The EU has pretty stringent privacy policy but at the same time wants ISPs to maintain long term customer usage records to facilitate law enforcement.

ISP’s privacy policy determines how customer information is used and protected. It is reasonable to expect ISP to collect and use information for diagnostic purposes and to improve service. However, some ISPs sell or otherwise make use of customer’s browsing data, for example as a way to create targeted ads.

Popularity of wireless networks raises additional security concerns. In a wired network an attacker must physically connect to the network. With wireless an attacker is able to eavesdrop from some distance away. This is especially worrisome with WiFi hot spots since they are in public places and the integrity of the owner is often unknown. When using public hot spots one should be careful accessing any resource where passwords are exchanged in the clear. Email is a particular concern because POP/SMTP credentials are sent in the clear unless encryption is enabled. If at all possible use SSL/TLS to access email accounts. At home use WPA2 or WPA3 (Wi-Fi Protected Access) with a strong password to protect privacy.

IPv6 addressing presents another possible security issue. One of the addressing schemes uses the 48-bit MAC address for the low order bits of the 128-bit IPv6 address. This means the hard coded machine MAC address, which is normally not visible outside the LAN in IPv4, becomes part of the public IP address and remains the same even when connected to a different ISP. A solution to this problem is to have the computer use a random number rather than the MAC address.
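The MAC-derived scheme mentioned above appears to be the modified EUI-64 format described in RFC 4291, in which the bytes FF FE are inserted in the middle of the MAC address and the universal/local bit of the first byte is inverted. The sketch below shows how the low 64 bits of the address are formed under that assumption; the MAC address used is made up.

```python
def eui64_interface_id(mac: str) -> str:
    """Derive the low 64 bits of an IPv6 address from a 48-bit MAC (modified EUI-64)."""
    octets = [int(part, 16) for part in mac.replace("-", ":").split(":")]
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address such as 00:1a:2b:3c:4d:5e")
    octets[0] ^= 0x02                                 # invert the universal/local bit
    eui = octets[:3] + [0xFF, 0xFE] + octets[3:]      # insert FF FE in the middle
    groups = [f"{(eui[i] << 8) | eui[i + 1]:04x}" for i in range(0, 8, 2)]
    return ":".join(groups)


# Hypothetical MAC address; the result stays the same on any network prefix.
print(eui64_interface_id("00:1a:2b:3c:4d:5e"))
```

Because the interface identifier depends only on the hardware address, it can be used to recognize the same device as it moves between networks, which is the privacy concern raised above.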

Another risk of wireless networks is the attacker is able to record a large number of sessions and then attempt to break encryption at their leisure.

1.20 Network Neutrality

As internet access becomes pervasive there is growing tension between ISP business practices and public policy. Network Neutrality proponents are concerned ISPs will create walled gardens and be in a position to favor some companies and disadvantage others. Opponents of Network Neutrality argue ISPs ought to be able to do anything they want with their own network.

The reason I went into so much detail earlier about required and optional ISP services was to identify those services that only an ISP is able to deliver. Network Neutrality ought to ensure network transparency is maintained, innovation is encouraged and the ISP is allowed to offer value add services while being prevented from acting as gatekeeper. The internet’s rapid rise in popularity is the result of its open architecture. Entrepreneurs need to be able to create new business models and interact with customers without requiring permission or cooperation of the network owner.

1.21 Finding an ISP

It can be difficult finding information about local ISPs. First step is contacting your town’s Cable franchise and incumbent telephone company. Many states are participating in the FCC national broadband mapping program to determine broadband availability. In NH the program is called cleverly enough: NH broadband mapping and planning program. NHBMI is working to deliver more accurate and detailed data on a town by town basis and has a speed test to record actual customer speed. The ease of use and data quality varies a lot by state.

2 Dialup - Plain Old Telephone Service

Dialup has come a long way from Bell 103 acoustic modem (circa 1962) operating at 300 bps to current crop of V.90/92 modems (circa 1998) capable of over 50,000 bps. Dialup internet access is available anywhere there is telephone service. It will even work on cellular at very low speed in a pinch. Dialup was extremely popular in the 1980s – 90s and was the introduction for many folks to the internet and prior to that local BBSes (bulletin board systems). Due to the heavy graphics content of web sites today it is painfully slow by current standards. Almost all Dialup ISPs support ITU-T V.90/92 standard. V.90 modems deliver up to 56 kbps (download) over the PSTN. In the US FCC power limitation reduces maximum speed to 53 kbps. V.90 transmission from subscriber to ISP (upload) uses V.34 mode limiting maximum upload speed to 33.6 kbps. If modem cannot connect in V.90 mode it automatically falls back to V.34 mode in both directions with a maximum speed of 33.6 kbps.

V.92 is a minor enhancement to V.90. It increases upload speed slightly to 48 kbps and implements faster auto negotiation to reduce call setup time. V.44 improves compression of reference test data to 6:1 vs 4:1 with V.90. Compression increases apparent speed because it reduces the number of bits transmitted over the slow telephone network. Modem on Hold (MOH) allows the modem to park a data session, allowing the user to answer a short incoming call. This works in conjunction with the Phone Company Call Waiting feature and requires support from the ISP.

Figure 10 V.90/92 Dialup Modem End-to-End

V.90/92 requires ISP modem connect to phone company digital trunk. Only a single digital to analog conversion can exist between ISP and user. Phone lines are analog between customer and central office or remote terminal. At that point they are digitized at 64 kbps. This means POTS modem technology has reached its theoretical maximum speed. To obtain higher speed requires use of different technology.

At connect time the modem probes the phone line to determine noise and attenuation characteristics in order to set initial connect speed. Speed is constantly adjusted in response to varying line conditions. To obtain maximum speed V.90 and V.92 modems require a phone circuit that exceeds minimum FCC requirements.

2.1 Dial Up Networking

DUN (Dialup networking) is used to establish an internet connection. The most common method used to traverse the telephone network is via PPP (Point-to-Point Protocol). PPP allows internet protocol (IP) packets to traverse the serial point-to-point telephone link between user and ISP. DUN automatically dials ISP phone number, waits for remote modem to connect and establishes a PPP session. The ISP performs user authentication and assigns an IP address. DUN monitors the connection and notifies user when it disconnects.

2.2 Session Duration

Dialup ISP business model assumes customer will stay connected for relatively short periods of time. To enforce this most dialup ISP’s automatically disconnect customer when time limit is reached. Session will also be dropped due to extended inactivity.

2.3 Multilink

In the quest for higher speed some dialup ISPs support Multilink. Multilink binds two dialup links into a single faster connection. If a customer typically connects at say 44 kbps multilink doubles speed to 88 kbps. Multilink requires two modems, two phone lines, and an ISP that supports it. Where available it is a useful technique to obtain better performance from dialup.

Software at each end of the link splits data between each connection, effectively doubling speed. Unfortunately, because data is still traveling over low speed dialup, multilink does not improve latency.

Multilink is also used with ISDN to bind the two bearer channels together yielding a 128 kbps connection.

2.4 Impairments

2.4.1 Slower Than Expected Speed

Modem data connection is more demanding than voice. There are many reasons for slow dialup even though phone sounds normal. Dialup modem impairments are discussed at length in a separate paper.

2.4.2 Call Waiting

Call waiting generates an alert tone to inform the user someone else is attempting to call. The call waiting process interferes with an existing data call. Call waiting can be temporarily disabled at the beginning of a call. The sequence varies by locale; in our area it is *70. Unfortunately, sending the disable sequence to a line not equipped with call waiting is interpreted as part of the dialed number, resulting in an incorrect connection. This is a problem if the modem uses multiple lines and not all are equipped with call waiting.

2.4.3 Shared Phone Line

If a dialup modem shares a phone line with a telephone or fax machine there is a possibility of mutual interference. If the modem is in use, picking up a phone will cause the modem to disconnect. Conversely, if the phone is in use the modem may attempt to connect, interfering with the call. One can use a privacy device that monitors phone line voltage to prevent this. When the phone is idle, open circuit voltage is high, around 48 volts. When a phone or modem is in use, voltage drops to less than 10 volts. Privacy adapters measure line voltage to prevent phone use if a call is already in progress. There are a couple of inconvenient side effects to this approach. A privacy device prevents calls being transferred from one phone to another, and it confuses the line use indicators built into many phones. I designed a Modem Access Adapter to prevent interference when modem and phone share the same line.
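The voltage test performed by a privacy device can be sketched in a few lines. The 48 volt and 10 volt figures come from the text above. The 20 volt decision threshold and the function names are assumptions made for illustration, and a real device would also need to deal with ringing voltage and polarity reversal.

```python
IDLE_VOLTS = 48.0        # nominal on-hook line voltage (from the text)
IN_USE_MAX_VOLTS = 10.0  # off-hook voltage stays below this (from the text)
THRESHOLD_VOLTS = 20.0   # assumed decision point between the two states


def line_in_use(volts: float) -> bool:
    """True when the measured voltage suggests another device is off hook."""
    return abs(volts) < THRESHOLD_VOLTS


def may_go_off_hook(volts: float) -> bool:
    """A privacy device lets this extension connect only when the line is idle."""
    return not line_in_use(volts)
```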

2.5 Installation

Other than requiring a V.90/92 modem there is no installation. PCs, especially laptops, used to include a built-in dialup modem. With the advent of Ethernet and WiFi that is typically no longer the case, so you will need to purchase a dialup modem and connect it to the phone line. Once that is done, create a DUN profile and log into the ISP.

2.6 Life in the Slow Lane

Dialup has the advantage of being accessible anywhere there is a landline phone. It can even be shared by multiple users on a home LAN. For several years in the late 90’s I shared a dialup connection on our home LAN, first using a connection sharing program and later a router. The problem with dialup is its incredibly low speed compared to other forms of internet access. A couple of decades ago, before web sites became so graphics intensive and software programs became chatty and needed multi-megabyte patches, dialup worked surprisingly well for our family. Today it is excruciatingly slow.

3 T-1 and E-1 Digital Carrier

The US Bell System developed T-1 digital carrier during the early 60’s to reduce interoffice transmission cost. Prior to T-1, analog frequency division multiplexing (FDM) was used to carry voice traffic between telephone switching centers. FDM carrier used a 4-wire circuit to carry 24 voice channels, one pair in each direction. T-1 was designed to also carry 24 voice channels, facilitating the transition from FDM to time division multiplexing (TDM). E-1 digital carrier, used in Europe, is similar but transports 30 voice channels. Each voice channel is digitized, resulting in a 64 kbps data rate. 24 channels require 1.536 Mbps plus an 8 kbps control channel, resulting in a data rate of 1.544 Mbps (E-1 is 2.048 Mbps). Strictly speaking, T-1 is the carrier system run over a 4-wire copper facility and DS-1 is the 1.544 Mbps signal it carries. Popular usage has corrupted this distinction. T-1 is now commonly used to mean any 1.544 Mbps service.
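The rate arithmetic above can be checked with a short sketch. The function names are made up for illustration. Two details are not stated in the text and are supplied here as background: the 8 kbps T-1 control channel corresponds to one framing bit added to each 192 bit frame, and the E-1 figure assumes the standard frame of 32 timeslots, 30 of which carry voice.

```python
SAMPLE_RATE_HZ = 8_000   # samples per second per voice channel
BITS_PER_SAMPLE = 8
DS0_BPS = SAMPLE_RATE_HZ * BITS_PER_SAMPLE  # one digitized voice channel


def t1_rate_bps(channels: int = 24, control_bps: int = 8_000) -> int:
    """24 voice channels plus the 8 kbps control channel."""
    return channels * DS0_BPS + control_bps


def t1_frame_bits(channels: int = 24) -> int:
    """One 8 bit sample per channel plus a single framing bit (background fact)."""
    return channels * BITS_PER_SAMPLE + 1


def e1_rate_bps(timeslots: int = 32) -> int:
    """Assumes 32 timeslots of 64 kbps each, 30 of them used for voice."""
    return timeslots * DS0_BPS
```

Both views of T-1 agree: `t1_rate_bps()` and `t1_frame_bits() * SAMPLE_RATE_HZ` each come to 1,544,000 bits per second.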

In the early 1980’s T-1 was tariffed and made available to customers. T-1 continues to be used in commercial service carrying both voice and data but has fallen out of favor due to high price and low bandwidth.

3.1 Converting Voice to Digital Bits

Voice grade phone service occupies the frequency band of 300-3000 Hz. Low frequencies are suppressed to minimize interference from 50/60 Hz power lines. Increasing the upper frequency beyond 3000 Hz does little to improve intelligibility and comes at the expense of greater bandwidth. Digital sampling must be performed at a rate of at least twice the highest frequency of interest to recover the original analog signal. Engineers chose a sample rate of 8,000 times a second. It was found that sampling to 12 bits, resulting in 4096 possible values, produced excellent voice quality. This required 96 kbps per channel, resulting in a composite data rate that exceeded what 1960s technology could deliver. To reduce data rate engineers decided to use only 8 bits, or 256 values, per sample, resulting in a 64 kbps data stream.

To minimize quality degradation, conversion is performed logarithmically. When sound level is low, quantization steps are close together. During loud passages steps are farther apart. This masks quantizing noise generated by the conversion process. Two slightly different methods are used, µ-law in the US and A-law in Europe. The resulting digital signal is called Pulse Code Modulation (PCM). 24 phone calls in the US (T-1) or 30 in Europe (E-1) are interleaved using Time Division Multiplexing (TDM). T-1 adds an 8 kbps framing channel for a composite data stream of 1.544 Mbps. E-1 adds two 64 kbps timeslots for framing and signaling for a composite of 2.048 Mbps.
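The effect of logarithmic conversion can be illustrated with the continuous µ-law companding curve. This is a sketch only: real telephone codecs use a piecewise approximation of this curve with a specific bit layout, which is not reproduced here. The function names and the 127 level signed code are assumptions for illustration.

```python
import math

MU = 255      # companding constant for µ-law
LEVELS = 127  # assumed signed 8 bit code range: -127..127


def compress(x: float) -> float:
    """Map a sample in [-1, 1] onto the logarithmic µ-law scale."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def expand(y: float) -> float:
    """Inverse of compress()."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)


def encode(x: float) -> int:
    """Compress, then round to the nearest of the evenly spaced code values."""
    return round(compress(x) * LEVELS)


def decode(code: int) -> float:
    """Recover an approximate sample value from a code."""
    return expand(code / LEVELS)


def step_size(code: int) -> float:
    """Distance in amplitude between two adjacent positive codes."""
    return decode(code + 1) - decode(code)
```

Comparing `step_size(0)` with `step_size(126)` shows the steps near silence are a couple of hundred times finer than the steps near full scale, which is how 8 bits can approach the perceived quality of a much wider linear sample.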

PCM coding scheme developed for T-1 is what makes V.90 and V.92 dialup modems possible and also the reason dialup is limited to 56 kbps.

3.2 Channelized vs. Unchannelized

When used for internet access voice channelization is neither required nor desired. In that case T-1 data circuits are unchannelized exposing total channel capacity to the IP layer. IP, rather than T-1, performs multiplexing. Some circuits are provisioned to allow flexible control of channelization. This allows an Integrated Access Device (IAD) to dynamically allocate bandwidth between voice and data.

3.3 Provisioning

The original implementation of T-1 required regenerators spaced every 6,000 feet. Regenerators recreate bipolar signals, allowing T-1 to deliver very low error rates compared to analog carrier. Regenerators can be powered from the T-1 line, called a span, eliminating need for local power. T-1 bipolar signaling is relatively noisy. This requires care during circuit provisioning to prevent interference between T-1 and other services, including other T-1s and DSL in the same cable.

Early T-1 required a 4-wire circuit, one pair in each direction. Newer T-1 deployments using HDSL2 only need a single pair. Digital signal processing techniques similar to those used with DSL reduce the outside plant cable requirement and increase the distance between regenerators.

A 4-wire T-1 circuit can be up to 50 miles long, with a regenerator about every mile. Very long T-1 circuits are rare nowadays as fiber is more cost effective.

3.4 CSU and DSU

Channel Service Unit (CSU) is connected directly to the 4-wire facility. The CSU regenerates T-1 bipolar signals before handing them off to Data Service Unit (DSU). The CSU provides keep alive and Loopback testing enabling Telco to monitor line quality.

T-1 uses bipolar plus and minus 3-volt pulses. Between pulses line voltage returns to zero. The Data Service Unit (DSU) converts bipolar signals to a synchronous interface such as V.35, which uses both single-ended (RS-232 style) and differential (RS-422 style) signaling to connect to customer equipment.
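The text does not name the line code, but bipolar signaling of this kind is commonly known as alternate mark inversion (AMI): a 0 bit sends no pulse and each 1 bit sends a pulse of the opposite polarity to the previous one. The sketch below models one voltage level per bit and ignores the return to zero within each bit period as well as any zero suppression scheme. The function names are hypothetical.

```python
PULSE_VOLTS = 3.0  # nominal pulse amplitude from the text


def ami_encode(bits):
    """Return one level per bit: 0 V for a 0, alternating +3 V / -3 V for each 1."""
    levels = []
    polarity = 1
    for bit in bits:
        if bit:
            levels.append(polarity * PULSE_VOLTS)
            polarity = -polarity  # the next pulse uses the opposite polarity
        else:
            levels.append(0.0)
    return levels


def ami_decode(levels):
    """Any pulse, regardless of polarity, is a 1."""
    return [1 if level != 0 else 0 for level in levels]


def has_bipolar_violation(levels):
    """True if two successive pulses share a polarity, which plain AMI never sends."""
    last = 0.0
    for level in levels:
        if level != 0:
            if last != 0 and (level > 0) == (last > 0):
                return True
            last = level
    return False
```

Because pulses alternate, the signal has no DC component, and a receiver can flag a pair of same-polarity pulses as a likely line error.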

In the US CSU and DSU are built into customer premise equipment (CPE), such as a T-1 router. In the rest of the world CSU is owned by service provider, CPE includes only the DSU.

3.5 Smartjack

When T-1 was developed the interface between CSU and DSU, called DSX-1, was designated the demarcation point between Telco and customer. DSX-1 is still the demarcation point in the rest of the world. During US deregulation the FCC defined the 4-wire facility as the demarcation point. This caused problems for service providers, as management and quality assurance functions were no longer under their control but provided by customer premise equipment (CPE).


The solution was the Smartjack. It presents a 4-wire (2-pair) interface to the customer and implements the service provider Loopback test function. This allows Telco to perform testing and maintenance functions while complying with FCC regulations.


Smartjacks can also be used to deliver T-1 service to customers by converting other transmission schemes, such as fiber, to traditional 2-pair T-1.

3.6 Installation

The service provider will typically install the Smartjack within a few hundred feet of where drop cable enters the building. Customer needs to purchase a router and install it. The cable between CPE and Smartjack is a regular Category rated patch cable.

3.7 Beyond T-1

The wired telephone network is almost entirely digital except for the 2-wire analog POTS customer loop. With digital technology multiple voice channels can easily be carried over a single circuit. The digital carrier hierarchy is based on voice channels. The lowest level, called Digital Signal 0 (DS-0), is a single PCM digitized voice circuit of 64 kbps. Next is DS-1 (24 voice circuits over T-1 carrier) operating at 1.544 Mbps, then DS-2 (T-2) operating at 6.312 Mbps, equivalent to 4 T-1 circuits, then DS-3 (T-3) at 44.736 Mbps, equivalent to 28 T-1 circuits.

Higher speed is optical using SONET (Synchronous Optical Network) and ITU SDH (Synchronous Digital Hierarchy). Optical Carrier 1 (OC-1) and Synchronous Transport Signal Level 1 (STS-1) operate at 51.84 Mbps. Next is STS-3 (OC-3) at 155.52 Mbps, then STS-12 (OC-12) at 622.08 Mbps and so forth. Beginning with STS-3 the hierarchy increases by a factor of four at each step. 10 Gbps STS-192 (OC-192) is an interesting convergence point. It is the first time Ethernet and SONET/STS operate at nearly the same speed, opening the door for Ethernet being carried directly over SONET.
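Every optical rate above is a whole multiple of the 51.84 Mbps base rate, so the hierarchy can be generated rather than memorized. The sketch below works in kbps to keep the arithmetic exact. The function names are made up for illustration.

```python
STS1_KBPS = 51_840  # OC-1 / STS-1 base rate, 51.84 Mbps


def oc_rate_kbps(n: int) -> int:
    """Line rate of OC-n (STS-n) in kbps."""
    return n * STS1_KBPS


def factor_of_four_steps(start: int = 3, count: int = 5):
    """OC levels starting at OC-3, each four times the previous one."""
    return [start * 4 ** i for i in range(count)]
```

`factor_of_four_steps()` yields OC-3, OC-12, OC-48, OC-192 and OC-768. `oc_rate_kbps(192)` is 9,953,280 kbps, just under the 10 Gbps Ethernet rate, which is the convergence point mentioned above.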

Telco Digital Carrier Hierarchy

| Line Rate | Designation | Notes |
|---|---|---|
| 637.010 Gbps | OC-12288 STM-4096 | |
| 159.252 Gbps | OC-3072 STM-1024 | |
| 100 Gbps | | 100 Gig Ethernet (not part of digital hierarchy) |
| 39.813 Gbps | OC-768 STM-256 | Telco convergence 40 Gig Ethernet |
| 10 Gbps | | 10 Gig Ethernet (not part of digital hierarchy) |
| 9.953 Gbps | OC-192 STM-64 | Telco convergence 10 Gig Ethernet, 10G-PON/EPON down |
| 4.977 Gbps | OC-96 | 10G-PON up |
| 2.5 Gbps | | 2.5 Gig Ethernet (not part of digital hierarchy) |
| 2.488 Gbps | OC-48 STM-16 | G-PON down |
| 1.244 Gbps | OC-24 | G-PON up, E-PON down/up, 10G-EPON up |
| 1 Gbps | | Gig Ethernet (not part of digital hierarchy) |
| 622.080 Mbps | OC-12 STM-4 | B-PON downstream |
| 155.520 Mbps | OC-3 STM-1 | B-PON upstream |
| 100 Mbps | | Fast Ethernet (not part of digital hierarchy) |
| 51.840 Mbps | OC-1 | Base of the optical hierarchy |
| 44.736 Mbps | T-3 DS-3 N.A. | |
| 34.368 Mbps | E-3 Europe | |
| 10.000 Mbps | | Ethernet (not part of digital hierarchy) |
| 8.448 Mbps | E-2 Europe | |
| 6.312 Mbps | T-2 DS-2 N.A. | |
| 2.048 Mbps | E-1 DS-1 Europe | 30 DS-0 voice channels |
| 1.544 Mbps | T-1 DS-1 N.A. | 24 DS-0 voice channels, Primary Rate ISDN |
| 144 kbps | Basic Rate ISDN | 2B (DS-0 bearer channels) + D (16 kbps data channel) |
| 64 kbps | Digital Signal-0 | Single 64 kbps PCM voice channel |

4 ISDN - Integrated Services Digital Network

Tremendous success of T1/E1 prompted the telephone industry to look for a way to deliver high-speed digital service directly to the customer. Integrated Services Digital Network (ISDN) was supposed to be the next big thing, poised to revolutionize the telephone industry. Alas, things have not played out that way. Deployment missteps and high cost have relegated ISDN to a technology footnote.


Basic Rate ISDN provides two 64 kbps bearer channels (B channels) and a 16 kbps data control channel (D channel) over a single voice grade copper loop. Primary Rate ISDN is basically a T-1 connection. ISDN is a circuit switched technology with very fast call setup time. Because the connection is digital end to end, the full 64 kbps of each B channel is available. ISDN requires a Terminal Adapter (TA). The TA connects to the ISDN copper loop and provides two POTS analog phone lines and a serial data connection.

4.1 Dial Up Networking

ISDN is a circuit switched technology. To access the internet a phone call is made to the ISP, just as with analog dialup. Once connected, access speed is 64 kbps due to the end-to-end digital nature of the connection. If the ISP offers multilink the second channel can be bonded to create a 128 kbps link. The extra channel can be automatically torn down and set up as needed to free up capacity for a voice call.
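The Basic Rate channel arithmetic, including giving up a bonded channel for a voice call, can be sketched as follows. The function names and the simple allocation rule are assumptions for illustration and do not model actual ISDN signaling.

```python
B_CHANNEL_BPS = 64_000  # each bearer channel
D_CHANNEL_BPS = 16_000  # data control channel
B_CHANNELS = 2          # Basic Rate ISDN carries two B channels


def bri_line_rate_bps() -> int:
    """Total 2B+D payload carried on the copper loop."""
    return B_CHANNELS * B_CHANNEL_BPS + D_CHANNEL_BPS


def data_rate_bps(voice_calls: int = 0, multilink: bool = True) -> int:
    """Internet speed given how many B channels are busy with voice calls."""
    free = B_CHANNELS - voice_calls
    if free <= 0:
        return 0
    # Without multilink only one B channel is ever used for data.
    used = free if multilink else 1
    return used * B_CHANNEL_BPS
```

With multilink and no voice calls the data rate is 128 kbps. Picking up a phone drops it to 64 kbps until the call ends and the second channel can be bonded again.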